Notes on Optimizing TIME_WAIT Connections in a Go HTTP Client

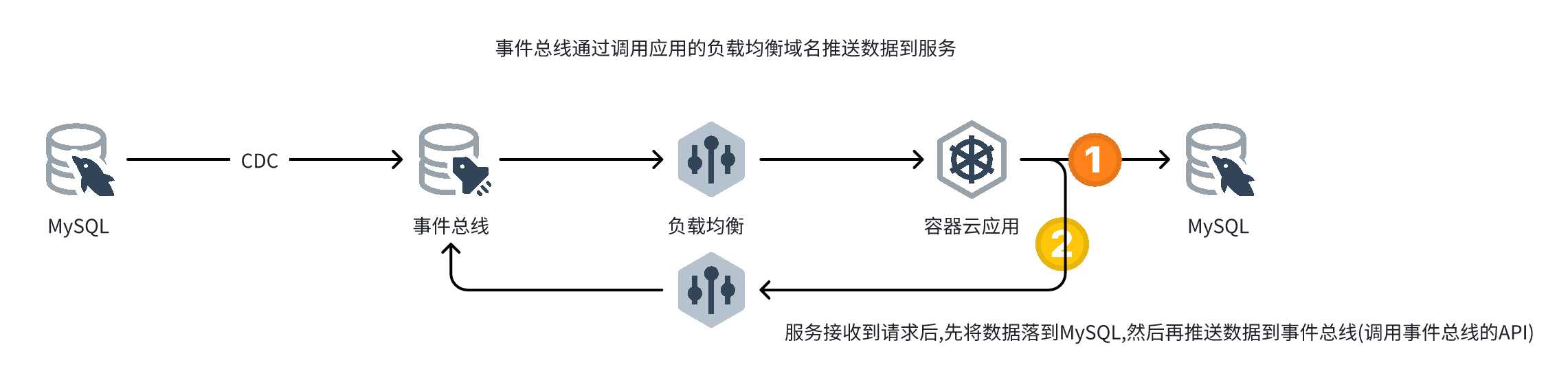

Business flow

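Analysis

Container monitoring showed a large number of short-lived connections between the service and the event bus's load balancer, so I went back to the code.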

The code that sends the request:

```go
import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"time"
	// plus the project's own util and httpc packages (import paths not shown)
)

func SendToKEvent(ev *KEvent) error {
	data, err := json.Marshal(ev.Data)
	if err != nil {
		return err
	}
	log.Println(string(data))
	if !sendEvent {
		log.Println("------ SEND_EVENT IS DISABLED ------")
		return nil
	}
	defer util.TimeCost("SendToKEvent")()

	body := bytes.NewReader(data)
	ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
	defer cancel()
	// req.WithContext(ctx) returns a modified copy; calling it without using
	// the result silently drops the timeout. NewRequestWithContext attaches
	// the 2s deadline directly.
	req, err := http.NewRequestWithContext(ctx, http.MethodPost, ev.Url, body)
	if err != nil {
		return err
	}
	req.Header.Set("Content-Type", "application/json; charset=utf-8")
	req.Header.Set("......", "......")
	for k, v := range ev.ExtMap {
		req.Header.Set(k, v)
	}
	resp, err := httpc.HttpClient.Do(req)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	// Any 2xx response from the event bus counts as success
	if resp.StatusCode >= 300 || resp.StatusCode < 200 {
		return fmt.Errorf("req failed, resp=%v", resp)
	}
	return nil
}
```

The HTTP client code:

```go
package httpc

import (
	"context"
	"net"
	"net/http"
	"time"
)

var (
	HttpClient = &http.Client{
		Transport: &http.Transport{
			Proxy: http.ProxyFromEnvironment,
			// 10s dial timeout; TCP keep-alive probes every 90s on open connections.
			DialContext: func(ctx context.Context, network, addr string) (conn net.Conn, e error) {
				return (&net.Dialer{
					Timeout:   10 * time.Second,
					KeepAlive: 90 * time.Second,
				}).DialContext(ctx, network, addr)
			},
			ForceAttemptHTTP2:     true,
			TLSHandshakeTimeout:   5 * time.Second,
			ResponseHeaderTimeout: 30 * time.Second,
			// Keep up to 10 idle keep-alive connections per host, closing them after 90s idle.
			MaxIdleConnsPerHost:   10,
			IdleConnTimeout:       90 * time.Second,
			ExpectContinueTimeout: 1 * time.Second,
		},
	}
)
```

The code looks fine at first glance, but it reminded me of a problem I had dealt with earlier in the Go Elasticsearch (ES) client:

https://jiankunking.com/tcp-state-diagram.html

Rereading the TIME_WAIT section of that post, and then the net/http documentation at https://pkg.go.dev/net/http#Response, confirmed the suspicion:

```go
// The http Client and Transport guarantee that Body is always
// non-nil, even on responses without a body or responses with
// a zero-length body. It is the caller's responsibility to
// close Body. The default HTTP client's Transport may not
// reuse HTTP/1.x "keep-alive" TCP connections if the Body is
// not read to completion and closed.
```

The adjusted code:

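```go
func SendToKEvent(ev *KEvent) error {
	// ......
	resp, err := httpc.HttpClient.Do(req)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	// Read the body to EOF so the Transport can put the keep-alive
	// connection back into its idle pool (io.Discard is the Go 1.16+
	// name for ioutil.Discard).
	io.Copy(io.Discard, resp.Body) // <-- the added line
	// ......
	return nil
}
```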

After redeploying, the number of TIME_WAIT connections dropped substantially, but a dozen or so remained:

```text
bash-5.0# netstat -anp |grep TIME
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:10964 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:45738 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:21178 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:37354 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:10966 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:37352 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:61524 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:61526 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:21180 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:33256 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:45736 TIME_WAIT -
tcp 0 0 ::ffff:172.16.3.247:8080 ::ffff:10.200.76.64:33254 TIME_WAIT -
bash-5.0#
```

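Note the addresses here:

172.16.3.247 is the service Pod's IP.

10.200.76.64 is the IP of the host node the Pod runs on.

In other words, there are short-lived connections between the Pod and its host. What are they doing?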

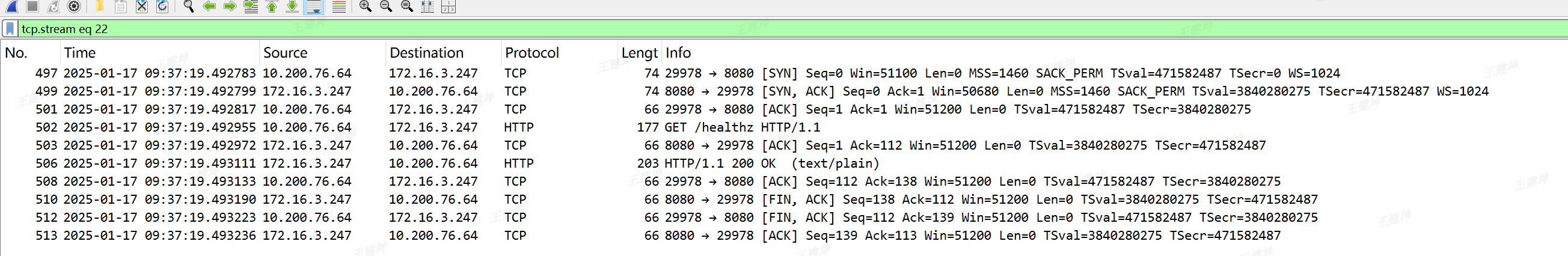

Let's capture the traffic and take a look.

Focus on frame No. 502:

```text
Frame 502: 177 bytes on wire (1416 bits), 177 bytes captured (1416 bits)
Ethernet II, Src: ee:ee:ee:ee:ee:ee (ee:ee:ee:ee:ee:ee), Dst: b6:cd:6a:f8:69:5e (b6:cd:6a:f8:69:5e)
Internet Protocol Version 4, Src: 10.200.76.64, Dst: 172.16.3.247
Transmission Control Protocol, Src Port: 29978, Dst Port: 8080, Seq: 1, Ack: 1, Len: 111
Hypertext Transfer Protocol
    GET /healthz HTTP/1.1\r\n    <-- note this line: this is the liveness probe endpoint configured for the service
    Host: 172.16.3.247:8080\r\n
    User-Agent: kube-probe/1.21\r\n
    Accept: */*\r\n
    Connection: close\r\n    <-- note this line
    \r\n
    [Response in frame: 506]
    [Full request URI: http://172.16.3.247:8080/healthz]
```

Connection

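Connection: keep-alive means that after a page finishes loading, the TCP connection between client and server that carried the HTTP data is not closed; if the client accesses a page on the same server again, it keeps using the already established connection.

Connection: close means that once a request completes, the TCP connection between client and server that carried the HTTP data is closed; when the client sends another request, it must establish a new TCP connection.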

From these descriptions, a request whose header carries Connection: close is served over a short-lived connection.

Next, the service's Deployment configuration:

```yaml
livenessProbe:
  failureThreshold: 3
  httpGet:
    path: /healthz
    port: 8080
    scheme: HTTP
  periodSeconds: 10
  successThreshold: 1
  timeoutSeconds: 1
```

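At this point everything adds up. Kubernetes calls the service's liveness endpoint every 10 seconds, and each call uses a new short-lived connection. Since the probe sends Connection: close, the service closes the connection right after responding, and the side that closes first is the one that enters TIME_WAIT, which is why these sockets show up on the Pod with local port 8080. A socket stays in TIME_WAIT for 2×MSL, which the Linux kernel fixes at 60 s.

The number of TIME_WAIT connections on the service should therefore hold roughly steady at (TIME_WAIT duration ÷ probe interval) × (probe connections per interval). One connection every 10 s would give about 6; the 12 sockets captured above come in pairs of adjacent client ports (10964/10966, 37352/37354, and so on), which suggests two probe connections per interval.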

Conclusion

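After a Go HTTP Client request completes, read the response body to the end and close it, even if the business logic does not care about the body; otherwise the Transport may not reuse the keep-alive connection.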

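Any service with an HTTP liveness probe configured will see short-lived connections, and how many of them there are depends on the probe interval.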

Further reading